Installation environment

OS - Ubuntu 20.04.4 LTS

GPU - NVIDIA GeForce RTX 3080

python 3.8.10

Check which CUDA and cuDNN versions are compatible with TensorFlow

https://www.tensorflow.org/install/source#gpu

→ Install CUDA 11.2 and cuDNN 8.1 (the pair listed for tensorflow-2.7.0)
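The lookup on that page can be sketched as a small table. The rows below were copied by hand from the tested GPU build configurations for a few releases, so treat them as an assumption and re-check them against the page; `TESTED_GPU_BUILDS` and `required_versions` are illustrative names, not part of TensorFlow.

```python
# Hand-copied subset of the tested GPU build configurations (Linux).
# Assumption: verify these rows against the page before relying on them.
TESTED_GPU_BUILDS = {
    "2.7.0": {"cuda": "11.2", "cudnn": "8.1"},
    "2.6.0": {"cuda": "11.2", "cudnn": "8.1"},
    "2.5.0": {"cuda": "11.2", "cudnn": "8.1"},
    "2.4.0": {"cuda": "11.0", "cudnn": "8.0"},
}


def required_versions(tf_version):
    """Return the CUDA/cuDNN pair listed for a TensorFlow release, or None."""
    return TESTED_GPU_BUILDS.get(tf_version)


if __name__ == "__main__":
    print(required_versions("2.7.0"))
```

A `None` result only means the release is missing from this hand-copied subset, not that it is unsupported.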

1. Install the graphics card driver

# List the installable drivers

$ ubuntu-drivers devices

== /sys/devices/pci0000:00/0000:00:01.0/0000:01:00.0 ==

modalias : pci:v000010DEd00002206sv000010DEsd00001455bc03sc00i00

vendor : NVIDIA Corporation

driver : nvidia-driver-515 - distro non-free recommended

driver : nvidia-driver-470 - distro non-free

driver : nvidia-driver-510-server - distro non-free

driver : nvidia-driver-510 - distro non-free

driver : nvidia-driver-470-server - distro non-free

driver : nvidia-driver-515-server - distro non-free

driver : xserver-xorg-video-nouveau - distro free builtin

# Automatically install the recommended driver

$ sudo ubuntu-drivers autoinstall

# Or install a specific driver, e.g. nvidia-driver-515

$ sudo apt install nvidia-driver-515

Reboot after installing the driver.

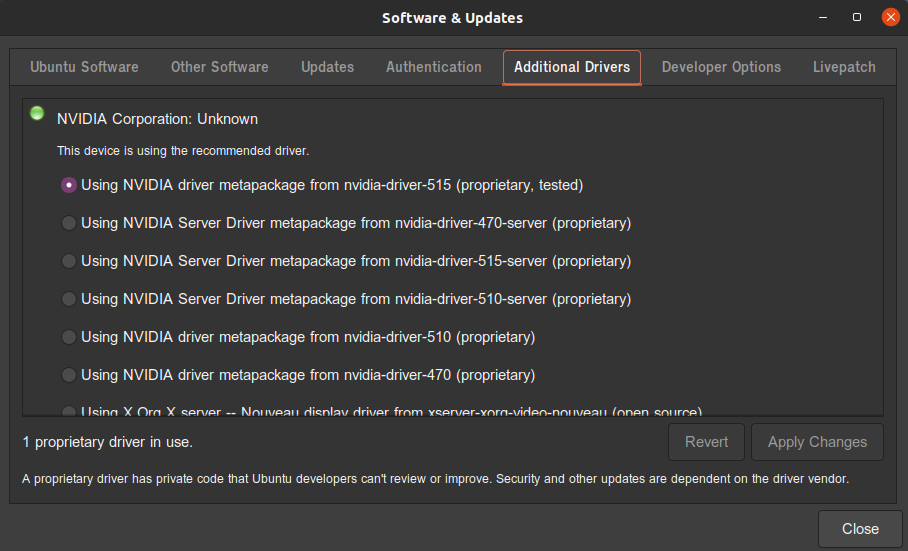

In Software & Updates → Additional Drivers, confirm that the nvidia-driver entry is selected.
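The recommended entry in the `ubuntu-drivers devices` listing shown earlier can also be picked out programmatically. This is a minimal sketch that assumes each driver line has the form `driver : <package> - <tags>`, as in the output above; `recommended_driver` and `SAMPLE` are illustrative names.

```python
import re

# A few lines in the same shape as the listing shown earlier.
SAMPLE = """\
vendor : NVIDIA Corporation
driver : nvidia-driver-515 - distro non-free recommended
driver : nvidia-driver-470 - distro non-free
driver : xserver-xorg-video-nouveau - distro free builtin
"""


def recommended_driver(listing):
    """Return the package on the line tagged 'recommended', or None."""
    for line in listing.splitlines():
        match = re.match(r"\s*driver\s*:\s*(\S+)\s+-\s+(.*)", line)
        if match and "recommended" in match.group(2).split():
            return match.group(1)
    return None


if __name__ == "__main__":
    print(recommended_driver(SAMPLE))
```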

Check the driver version

# Check the driver version: see Driver Version on the top line

$ nvidia-smi

+-----------------------------------------------------------------------------+
| NVIDIA-SMI 515.65.01    Driver Version: 515.65.01    CUDA Version: 11.7     |
|-------------------------------+----------------------+----------------------+
| GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
| Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
|                               |                      |               MIG M. |
|===============================+======================+======================|
|   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0  On |                  N/A |
|  0%   43C    P8    14W / 340W |    680MiB / 10240MiB |      1%      Default |
|                               |                      |                  N/A |
+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+
| Processes:                                                                  |
|  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
|        ID   ID                                                   Usage      |
|=============================================================================|
|    0   N/A  N/A       908      G   /usr/lib/xorg/Xorg                 35MiB |
|    0   N/A  N/A     13349      G   /usr/lib/xorg/Xorg                128MiB |
|    0   N/A  N/A     13482      G   /usr/bin/gnome-shell               35MiB |
|    0   N/A  N/A     13918      G   ...213092074326858835,131072       61MiB |
|    0   N/A  N/A     15560      G   ...RendererForSitePerProcess      131MiB |
|    0   N/A  N/A     16298      G   ...RendererForSitePerProcess      269MiB |
+-----------------------------------------------------------------------------+

Note that CUDA Version: 11.7 in the header is the highest CUDA version this driver supports; it does not show which toolkit is installed.

A CUDA toolkit may have been installed along with the graphics driver; if so, remove it.

# Remove cuda

$ sudo rm -rf /usr/local/cuda*

# PATH settings: open /etc/profile and delete any entries left over from the removed CUDA, for example:

$ sudo vi /etc/profile

> export PATH=$PATH:/usr/local/cuda-11.0/bin

> export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/cuda-11.0/lib64

> export CUDADIR=/usr/local/cuda-11.0

2. Install CUDA

Installation

Install CUDA Toolkit 11.2.

Select the options that match your environment, then run the commands displayed underneath.

$ wget https://developer.download.nvidia.com/compute/cuda/11.2.0/local_installers/cuda_11.2.0_460.27.04_linux.run

$ sudo sh cuda_11.2.0_460.27.04_linux.run

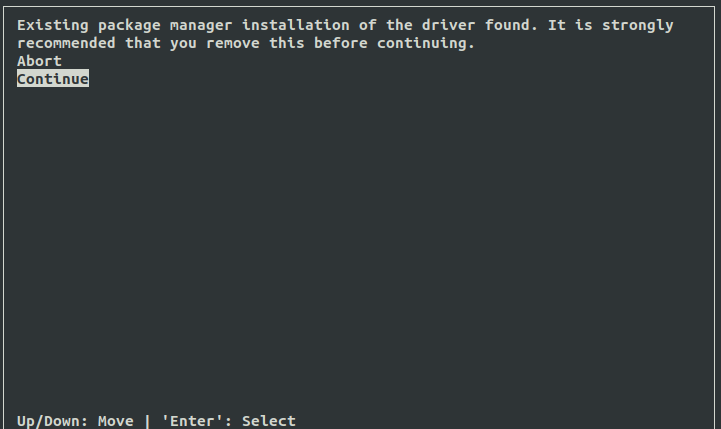

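Select Continue in the window that appears.

- Error during CUDA installation

# Error message

> Failed to verify gcc version. See log at /var/log/cuda-installer.log for details.

→ This error appears when gcc is not installed.

# Install gcc

$ sudo apt-get install gcc

After installing gcc, run the installer again.

Select Continue → type accept.

The driver is already installed, so deselect only the Driver entry, then choose Install.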
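The gcc failure described above can be caught before launching the installer. This is a minimal sketch that only checks whether gcc is on PATH, which matches the cause given above; the installer's own check looks at the gcc version, which is not reproduced here. `missing_tools` is an illustrative name.

```python
import shutil


def missing_tools(tools=("gcc",), which=shutil.which):
    """Return the names in `tools` that cannot be found on PATH."""
    return [name for name in tools if which(name) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Install before running the CUDA installer:", ", ".join(missing))
    else:
        print("gcc found on PATH")
```

The `which` parameter exists only so the lookup can be swapped out when trying the function without touching the real PATH.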

Set the environment variables

# Set the environment variables (\$ keeps $PATH literal in /etc/profile so it is expanded at login, not when the line is written)

$ sudo sh -c "echo 'export PATH=\$PATH:/usr/local/cuda-11.2/bin' >> /etc/profile"

$ sudo sh -c "echo 'export LD_LIBRARY_PATH=\$LD_LIBRARY_PATH:/usr/local/cuda-11.2/lib64' >> /etc/profile"

$ sudo sh -c "echo 'export CUDADIR=/usr/local/cuda-11.2' >> /etc/profile"

# Apply

$ source /etc/profile

Verify

$ nvcc -V

nvcc: NVIDIA (R) Cuda compiler driver

Copyright (c) 2005-2020 NVIDIA Corporation

Built on Mon_Nov_30_19:08:53_PST_2020

Cuda compilation tools, release 11.2, V11.2.67

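Build cuda_11.2.r11.2/compiler.29373293_0

3. Install cuDNN

Open the cuDNN download page and log in → Archived cuDNN Releases

Select Download cuDNN v8.1.0 (January 26th, 2021), for CUDA 11.0,11.1 and 11.2, then download the package for your environment.

Extract the archive and move the files

# Extract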

$ cd Downloads

$ tar xvzf cudnn-11.2-linux-x64-v8.1.0.77.tgz

# Move

$ sudo mv cuda/include/cudnn* /usr/local/cuda/include

$ sudo mv cuda/lib64/libcudnn* /usr/local/cuda/lib64

$ sudo chmod a+r /usr/local/cuda/include/cudnn*.h /usr/local/cuda/lib64/libcudnn*

Links

# Create the links

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_train.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_train.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_infer.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_train.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_adv_infer.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_train.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_ops_train.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn_cnn_infer.so.8

$ sudo ln -sf /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn.so.8.1.0 /usr/local/cuda-11.2/targets/x86_64-linux/lib/libcudnn.so.8

# Apply

$ sudo ldconfig

# Verify

$ ldconfig -N -v $(sed 's/:/ /g' <<< $LD_LIBRARY_PATH) 2>/dev/null | grep libcudnn

libcudnn_cnn_infer.so.8 -> libcudnn_cnn_infer.so.8.1.0

libcudnn_cnn_train.so.8 -> libcudnn_cnn_train.so.8.1.0

libcudnn_adv_infer.so.8 -> libcudnn_adv_infer.so.8.1.0

libcudnn_ops_infer.so.8 -> libcudnn_ops_infer.so.8.1.0

libcudnn.so.8 -> libcudnn.so.8.1.0

libcudnn_adv_train.so.8 -> libcudnn_adv_train.so.8.1.0

libcudnn_ops_train.so.8 -> libcudnn_ops_train.so.8.1.0

4. Install TensorFlow

As seen above, CUDA 11.2, cuDNN 8.1, and tensorflow-2.7.0 are compatible, so install tensorflow-2.7.0.

# Install TensorFlow

$ pip install tensorflow==2.7.0

Check whether TensorFlow uses the GPU

>>> import tensorflow as tf

Error when importing tensorflow

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ModuleNotFoundError: No module named 'tensorlofw'

>>> import tensorflow as tf

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/__init__.py", line 41, in <module>

from tensorflow.python.tools import module_util as _module_util

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/python/__init__.py", line 41, in <module>

from tensorflow.python.eager import context

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/python/eager/context.py", line 33, in <module>

from tensorflow.core.framework import function_pb2

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/core/framework/function_pb2.py", line 16, in <module>

from tensorflow.core.framework import attr_value_pb2 as tensorflow_dot_core_dot_framework_dot_attr__value__pb2

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/core/framework/attr_value_pb2.py", line 16, in <module>

from tensorflow.core.framework import tensor_pb2 as tensorflow_dot_core_dot_framework_dot_tensor__pb2

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/core/framework/tensor_pb2.py", line 16, in <module>

from tensorflow.core.framework import resource_handle_pb2 as tensorflow_dot_core_dot_framework_dot_resource__handle__pb2

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/core/framework/resource_handle_pb2.py", line 16, in <module>

from tensorflow.core.framework import tensor_shape_pb2 as tensorflow_dot_core_dot_framework_dot_tensor__shape__pb2

File "/home/jjaegii/.local/lib/python3.8/site-packages/tensorflow/core/framework/tensor_shape_pb2.py", line 36, in <module>

_descriptor.FieldDescriptor(

File "/home/jjaegii/.local/lib/python3.8/site-packages/google/protobuf/descriptor.py", line 560, in __new__

_message.Message._CheckCalledFromGeneratedFile()

TypeError: Descriptors cannot not be created directly.

If this call came from a _pb2.py file, your generated code is out of date and must be regenerated with protoc >= 3.19.0.

If you cannot immediately regenerate your protos, some other possible workarounds are:

1. Downgrade the protobuf package to 3.20.x or lower.

2. Set PROTOCOL_BUFFERS_PYTHON_IMPLEMENTATION=python (but this will use pure-Python parsing and will be much slower).

More information: https://developers.google.com/protocol-buffers/docs/news/2022-05-06#python-updates

→ protobuf must be downgraded (to 3.20.x or lower, as the message says).
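The error text above asks for protobuf 3.20.x or lower. As a rough sketch, the installed version can be compared against that limit before deciding to downgrade. `needs_protobuf_downgrade` is an illustrative helper, and it assumes a plain `major.minor[.patch]` version string.

```python
def needs_protobuf_downgrade(version):
    """True if `version` is newer than the 3.20.x line named in the error."""
    parts = version.split(".")
    major, minor = int(parts[0]), int(parts[1])
    return (major, minor) > (3, 20)


if __name__ == "__main__":
    from importlib import metadata  # standard library, Python 3.8+

    try:
        installed = metadata.version("protobuf")
    except metadata.PackageNotFoundError:
        print("protobuf is not installed")
    else:
        print(installed, "-> downgrade needed:", needs_protobuf_downgrade(installed))
```

If a downgrade is needed, one way to do it is a pin such as `pip install "protobuf<3.21"`; the exact pin is an assumption, chosen to match the "3.20.x or lower" wording of the message.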

>>> tf.config.list_physical_devices('GPU')

[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

NUMA node error

>>> tf.config.list_physical_devices('GPU')

2022-08-04 10:40:37.804158: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:939] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-08-04 10:40:37.866573: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:939] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2022-08-04 10:40:37.866819: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:939] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

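[PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

→ A NUMA (Non-Uniform Memory Access) node message is printed. It is logged at info level (the I after the timestamp), and the GPU is still listed.

[Troubleshooting] NUMA node read from SysFS had negative value -1

The linked post above explains how to resolve it.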
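The message refers to the `numa_node` value that the kernel exposes in sysfs for the GPU's PCI device. The sketch below only reads that value so it can be inspected; it does not reproduce the fix from the linked post. `read_numa_node` is an illustrative name, and `0000:01:00.0` is the bus address taken from the `ubuntu-drivers devices` output earlier.

```python
from pathlib import Path


def read_numa_node(pci_address, sysfs_root="/sys/bus/pci/devices"):
    """Return the integer stored in <sysfs_root>/<pci_address>/numa_node.

    Returns None if the file is missing or does not contain an integer.
    """
    node_file = Path(sysfs_root) / pci_address / "numa_node"
    try:
        return int(node_file.read_text().strip())
    except (FileNotFoundError, ValueError):
        return None


if __name__ == "__main__":
    # Bus address of the GPU on the machine described in this post.
    print(read_numa_node("0000:01:00.0"))
```

A result of -1 corresponds to the "negative value (-1)" in the log lines above.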

If the device name appears as Device 2206 instead of NVIDIA Corporation GA102 [GeForce RTX 3080], the device names need to be updated.

# Update the PCI ID database so that the NVIDIA device name resolves

$ sudo update-pciids
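Whether a device description still shows the fallback ID can be checked with a small pattern match. This sketch assumes the description comes from a tool such as `lspci` (the post does not name the tool), and `is_unresolved_name` is an illustrative helper.

```python
import re


def is_unresolved_name(description):
    """True if the description falls back to a bare 'Device <4 hex digits>' ID."""
    return re.search(r"\bDevice [0-9a-fA-F]{4}\b", description) is not None


if __name__ == "__main__":
    for text in (
        "NVIDIA Corporation Device 2206",
        "NVIDIA Corporation GA102 [GeForce RTX 3080]",
    ):
        print(text, "->", is_unresolved_name(text))
```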